In today’s hyper-competitive digital landscape,...

Change Data Capture (CDC)

Traditionally, data changes were migrated between applications in real time or near real time using APIs developed on the source or target with a push or pull mechanism, incremental data transfers using database logs, batch processes with custom scripts, and so on. These solutions had drawbacks such as:


- Source and target system code changes to cater to specific requirements

- Near real-time transfers leading to data loss

- Performance issues when the data change frequency and/or the volume is high

- Push or pull mechanisms leading to high-availability requirements

- Adding multiple target applications needed a longer turnaround time

- Database-specific real-time migration was confined to vendor-specific implementations

- Scaling the solution was a time- and cost-intensive operation

Common use cases for CDC include:

- Moving data changes from OLTP to OLAP in real time

- Consolidating audit logs

- Tracking data changes of specific objects to be fed into target SQL or NoSQL databases

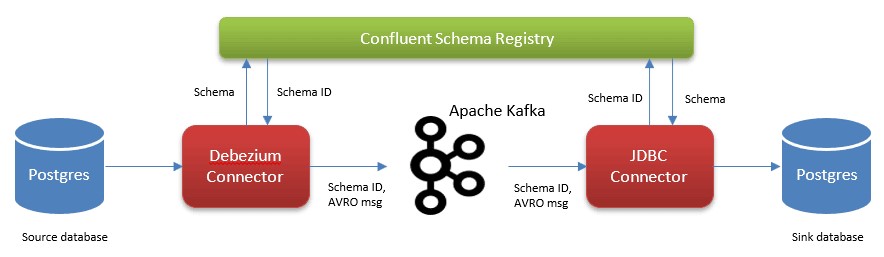

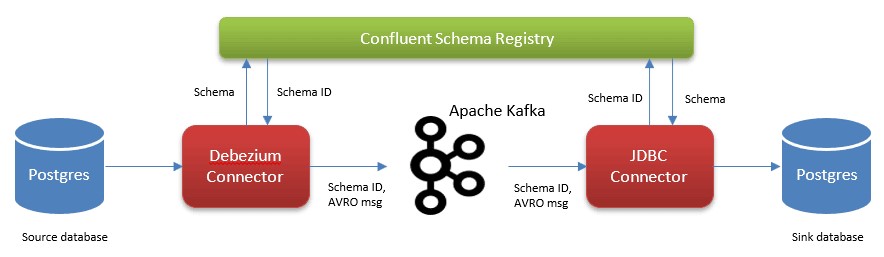

Overview:

In the following example, we use CDC between source and target PostgreSQL instances using the Debezium connector on Apache Kafka, with a Confluent Schema Registry to migrate schema changes onto the target database. We use Docker containers to set up the environment. Now, let us set up the Docker containers to perform CDC operations. In this article, we focus only on insert and update operations.
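To make the insert and update flow concrete, here is a minimal sketch of how a consumer might apply Debezium-style change events to a target. It assumes a simplified event envelope with `op` and `after` fields, where `op` is `c` for an insert, `u` for an update, and `r` for a snapshot read. The column names `id` and `name` and the in-memory dictionary standing in for the target table are hypothetical, chosen only for illustration.

```python
from typing import Any, Dict, List

Row = Dict[str, Any]


def apply_change_event(table: Dict[Any, Row], event: Dict[str, Any], key: str = "id") -> None:
    """Apply one simplified Debezium-style change event to an in-memory table.

    Inserts ("c"), snapshot reads ("r") and updates ("u") are all treated as
    an upsert keyed on the primary key found in the "after" image.
    """
    op = event.get("op")
    if op in ("c", "r", "u"):
        after = event["after"]
        table[after[key]] = dict(after)
    # Other operations (such as deletes, "d") are ignored here because the
    # article covers only insert and update operations.


# Hypothetical events for a table with columns "id" and "name".
events: List[Dict[str, Any]] = [
    {"op": "c", "before": None, "after": {"id": 1, "name": "alice"}},
    {"op": "c", "before": None, "after": {"id": 2, "name": "bob"}},
    {"op": "u", "before": {"id": 1, "name": "alice"}, "after": {"id": 1, "name": "alice-updated"}},
]

target_table: Dict[Any, Row] = {}
for change in events:
    apply_change_event(target_table, change)

print(target_table)
```

Treating inserts and updates as the same upsert keeps the sketch idempotent: replaying the same events leaves the target in the same state.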


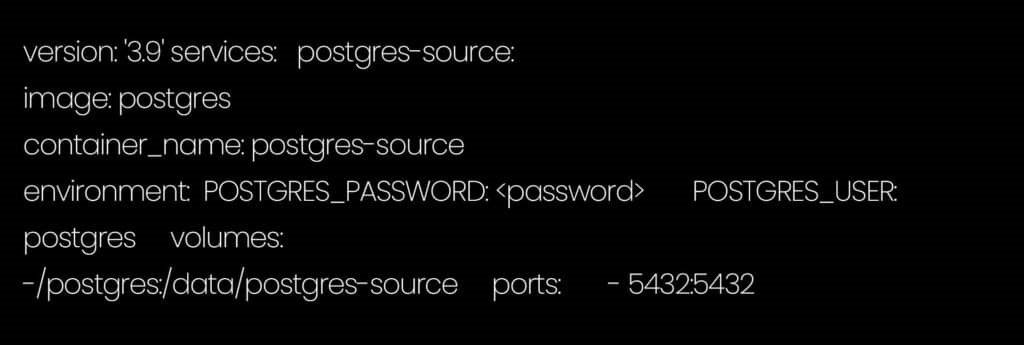

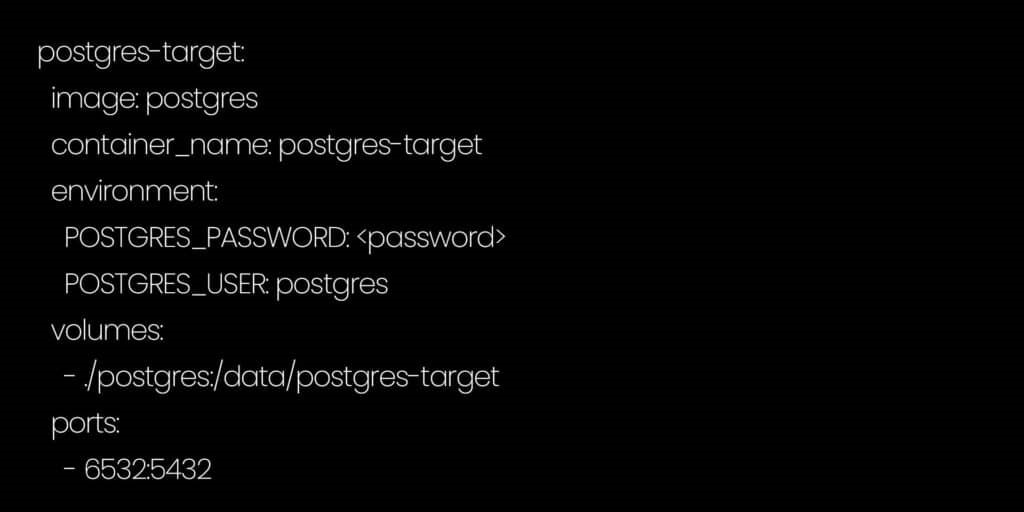

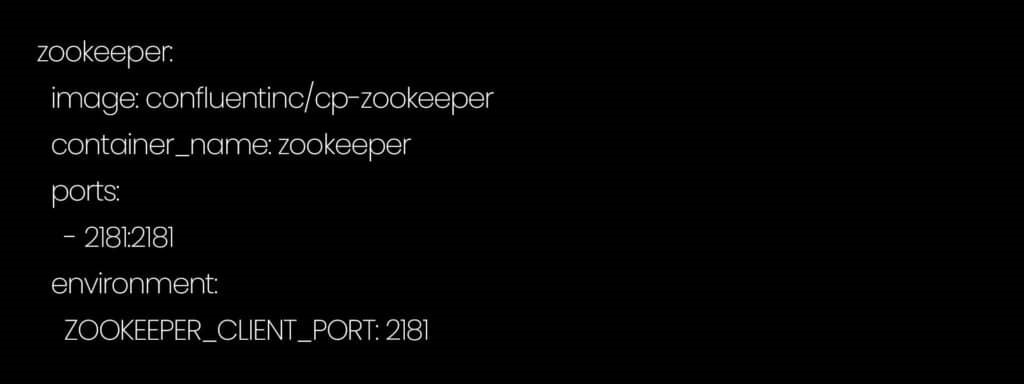

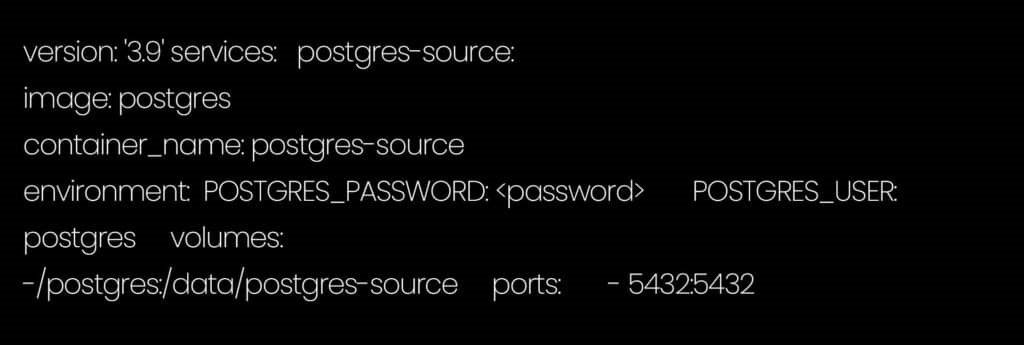

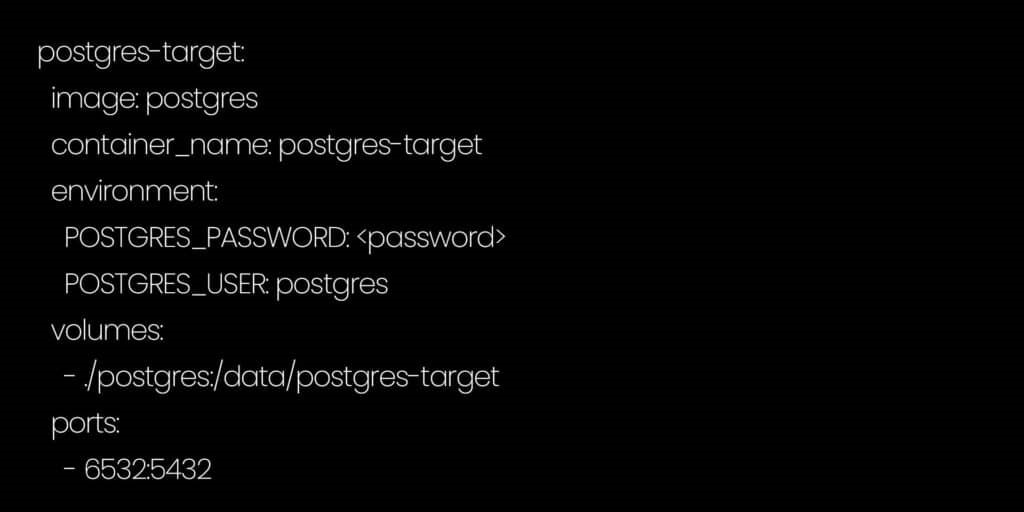

Docker Containers:

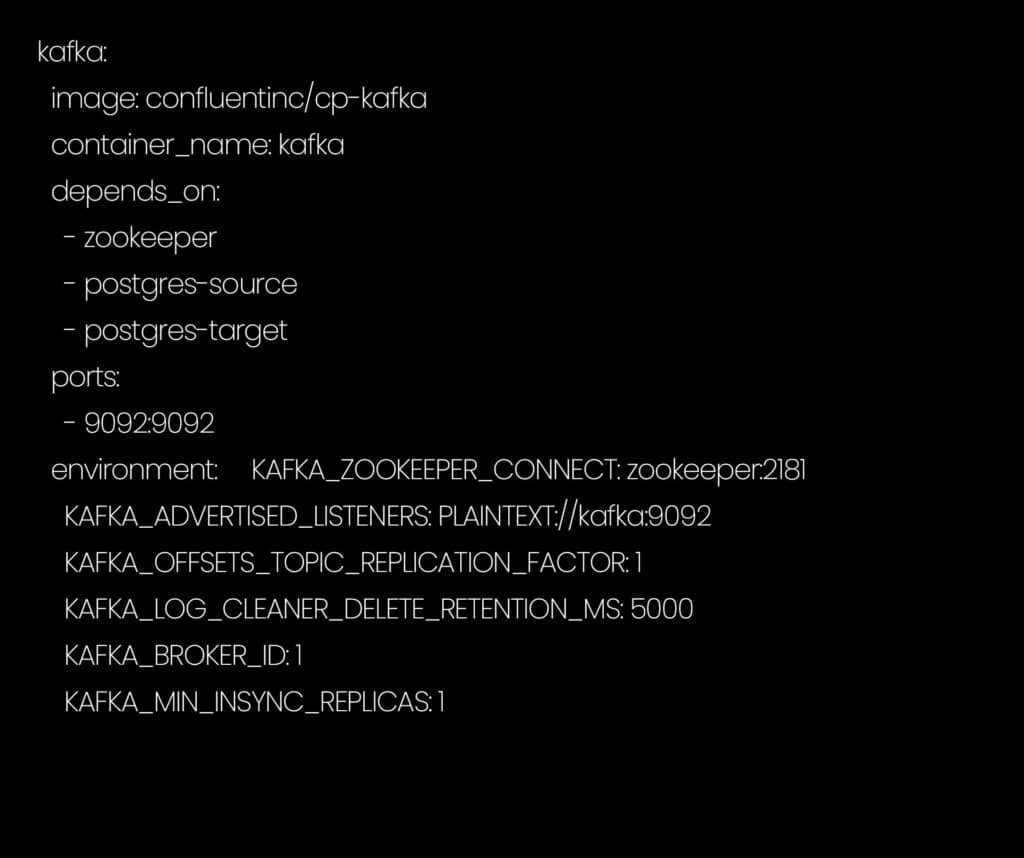

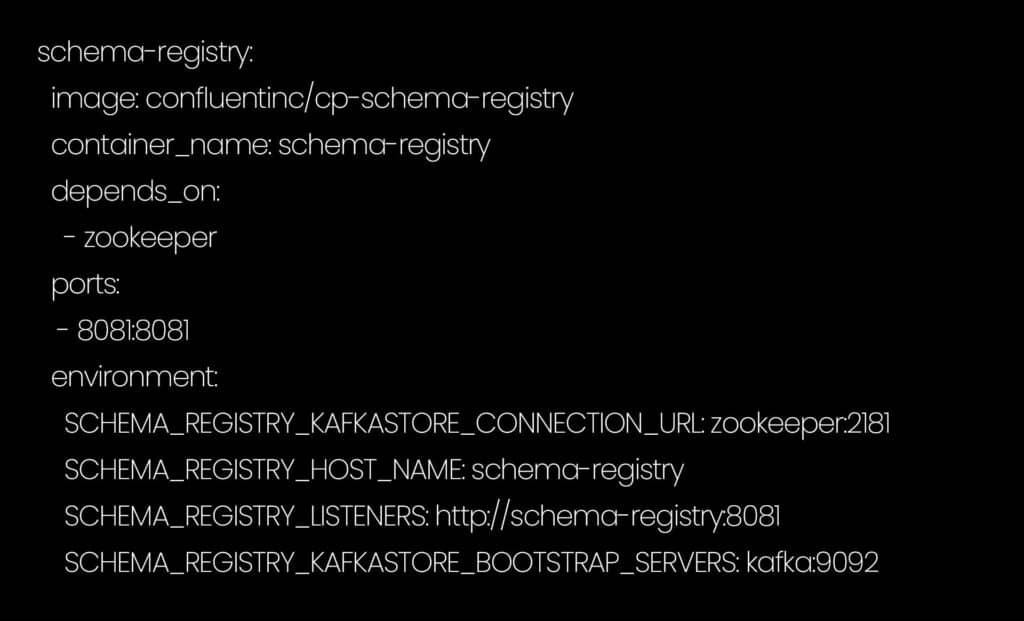

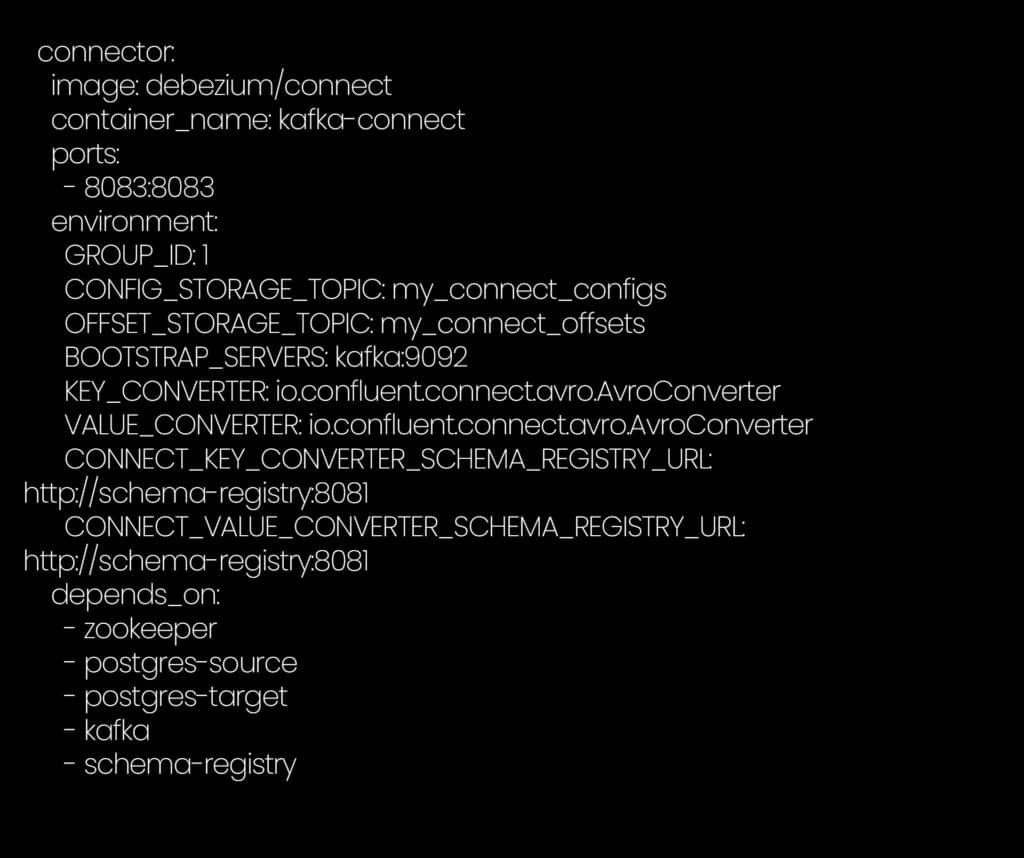

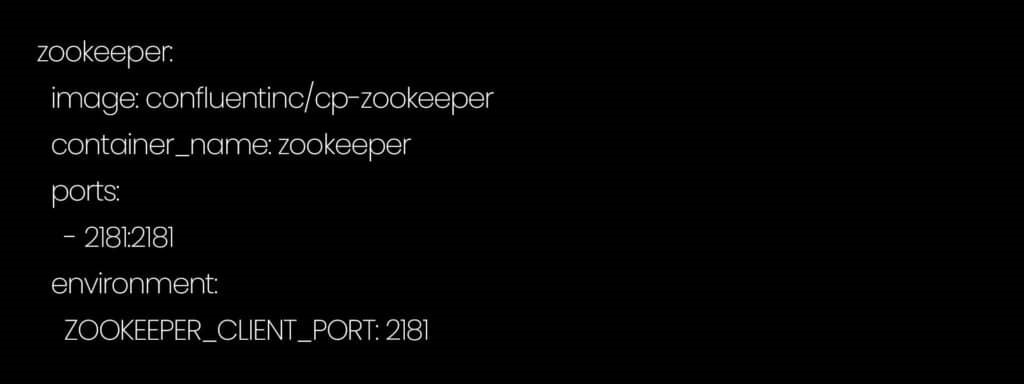

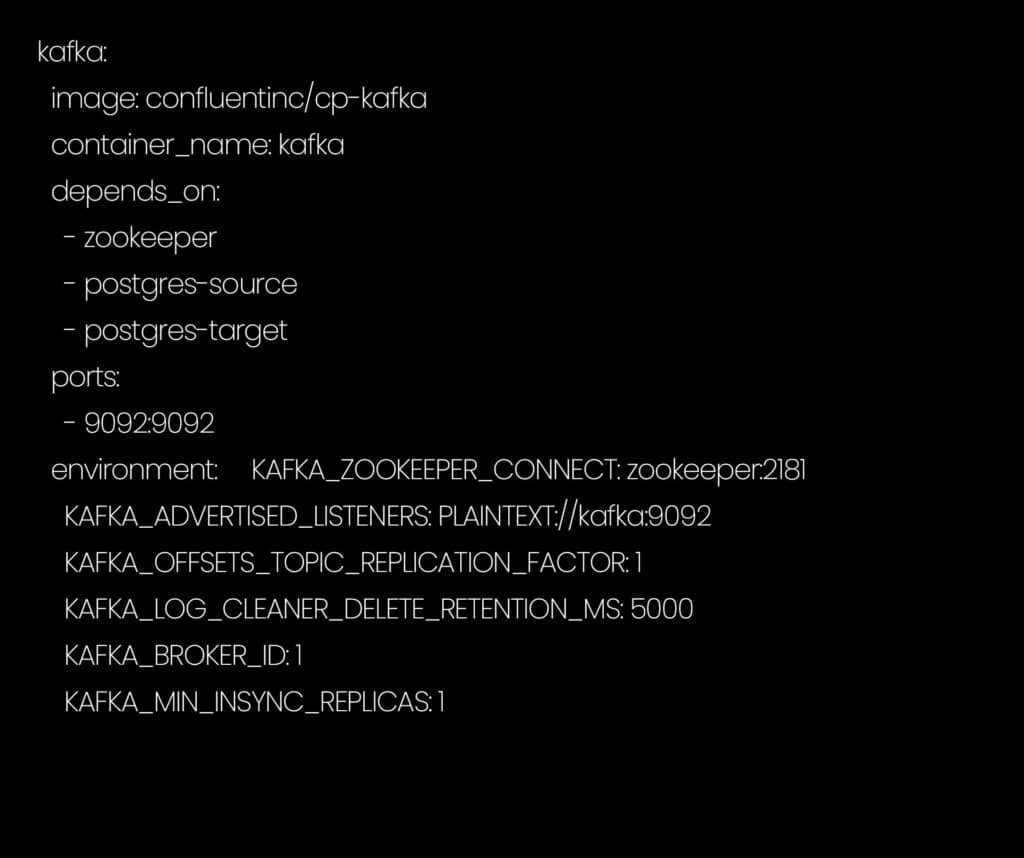

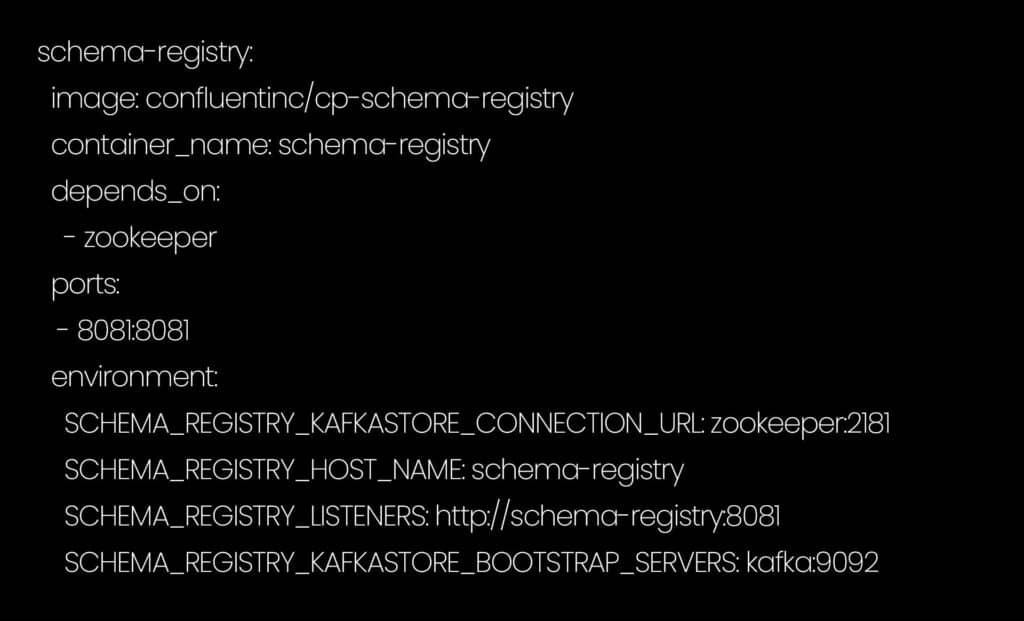

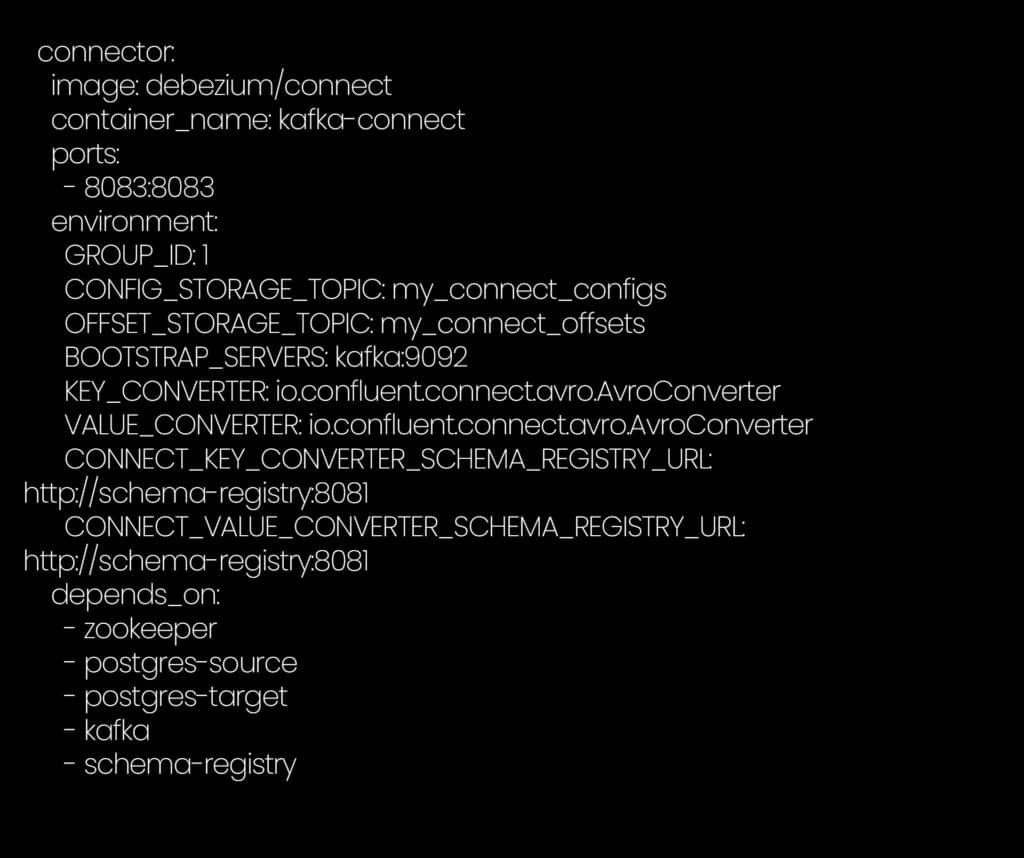

- On a Windows or Linux machine, install Docker and create a docker-compose.yml file with the following configuration.

- Using a command prompt or terminal, navigate to the directory in which the file was created and run the command below to start the containers.

Debezium plugin configuration:

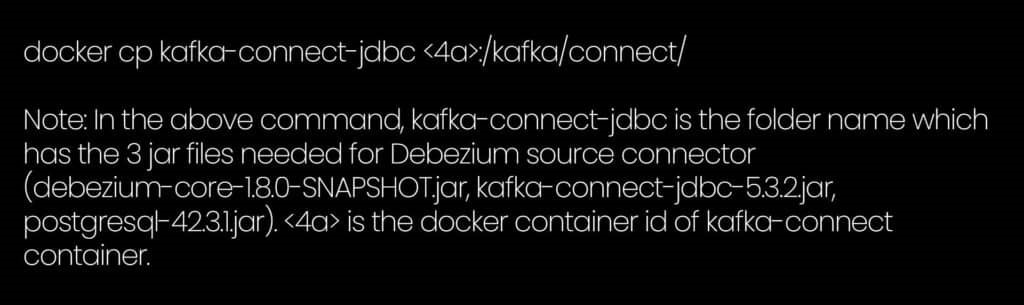

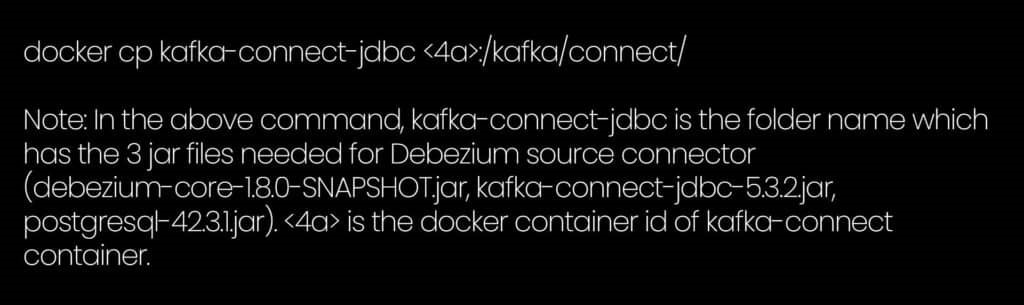

- Once the Docker containers are created, we need to copy the Debezium Kafka Connect JAR files into the plugins folder using the command below.

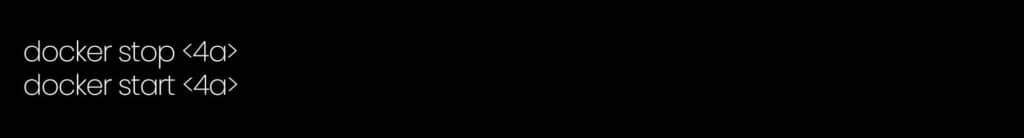

- Restart the kafka-connect container after the copy command is executed.
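The copy and restart steps above can be sketched as a small helper that assembles the Docker commands as argument lists. This is only an illustration: the local directory `./debezium-connector-postgres` and the in-container plugin folder `/usr/share/java/` are assumptions, and the destination must match whatever `plugin.path` the Kafka Connect worker is actually configured with. The container name `kafka-connect` is taken from the step above.

```python
import shlex
from typing import List


def plugin_install_commands(
    local_plugin_dir: str,
    container: str = "kafka-connect",
    plugin_path: str = "/usr/share/java/",  # assumption: must match the worker's plugin.path
) -> List[List[str]]:
    """Build the commands that copy the connector JARs and restart Kafka Connect."""
    return [
        # Copy the unpacked Debezium connector directory into the container.
        ["docker", "cp", local_plugin_dir, f"{container}:{plugin_path}"],
        # Restart the container so the worker rescans its plugin path.
        ["docker", "restart", container],
    ]


# "./debezium-connector-postgres" is a hypothetical local directory of JAR files.
for command in plugin_install_commands("./debezium-connector-postgres"):
    print(shlex.join(command))
```

Each list can be passed to `subprocess.run(command, check=True)` to execute it instead of printing it.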

Database configuration:

- Connect to the postgres-source and postgres-target databases using psql or the pgAdmin tool.

- Create a database named testdb on both servers.

- Create a sample table in both databases.

- In the postgres-source database, execute the command below to change wal_level to logical.

- Restart the postgres-source Docker container using the docker stop and docker start commands.
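The database steps above can be sketched as a sequence of `psql` calls run inside the containers. This is a hedged illustration rather than the exact commands used in the article: the `postgres` user, the `customers` table and its columns are assumptions, while the container names, the `testdb` database and the `wal_level` change come from the steps above.

```python
from typing import List

# Hypothetical sample table; the article does not specify its definition.
SAMPLE_TABLE_SQL = "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);"


def psql_in_container(container: str, sql: str, dbname: str = "postgres",
                      user: str = "postgres") -> List[str]:
    """Build a 'docker exec ... psql -c' command that runs one SQL statement."""
    return ["docker", "exec", container, "psql", "-U", user, "-d", dbname, "-c", sql]


def database_setup_commands(source: str = "postgres-source",
                            target: str = "postgres-target",
                            dbname: str = "testdb") -> List[List[str]]:
    """Build the commands for the database configuration steps, in order."""
    commands: List[List[str]] = []
    for container in (source, target):
        commands.append(psql_in_container(container, f"CREATE DATABASE {dbname};"))
        commands.append(psql_in_container(container, SAMPLE_TABLE_SQL, dbname=dbname))
    # Logical decoding requires wal_level = logical on the source server.
    commands.append(psql_in_container(source, "ALTER SYSTEM SET wal_level = logical;"))
    # wal_level only takes effect after a server restart.
    commands.append(["docker", "stop", source])
    commands.append(["docker", "start", source])
    # Once the server accepts connections again, confirm the new setting.
    commands.append(psql_in_container(source, "SHOW wal_level;"))
    return commands


for command in database_setup_commands():
    print(" ".join(command))
```

The printed lines are for inspection only, since the SQL arguments are not shell-quoted; pass each list to `subprocess.run(command, check=True)` to execute it.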

Source Connector:

Using any REST client tool, such as Postman, send a POST request to the following endpoint with the body below to create the source connector. Endpoint: http://localhost:8083/connectors
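As an alternative to a GUI client, the request can be sketched in Python. The body below is an assumption about what a Debezium PostgreSQL source connector configuration might look like for this setup, not the article's exact payload: the connector name, credentials, `public.customers` table, topic prefix and the `http://schema-registry:8081` URL are all hypothetical. The property `topic.prefix` applies to Debezium 2.x; older 1.x releases use `database.server.name` instead.

```python
import json
import urllib.request
from typing import Any, Dict

CONNECT_URL = "http://localhost:8083/connectors"  # endpoint given in the article
SCHEMA_REGISTRY_URL = "http://schema-registry:8081"  # assumption: registry service name and port


def build_source_connector(name: str = "postgres-source-connector") -> Dict[str, Any]:
    """Build a hypothetical request body for a Debezium PostgreSQL source connector."""
    return {
        "name": name,
        "config": {
            "connector.class": "io.debezium.connector.postgresql.PostgresConnector",
            "plugin.name": "pgoutput",  # logical decoding plugin built into PostgreSQL 10+
            "database.hostname": "postgres-source",
            "database.port": "5432",
            "database.user": "postgres",      # assumption
            "database.password": "postgres",  # assumption
            "database.dbname": "testdb",
            "topic.prefix": "source",                   # assumption; Debezium 2.x property
            "table.include.list": "public.customers",   # hypothetical sample table
            # Avro converters so that schemas are stored in the schema registry.
            "key.converter": "io.confluent.connect.avro.AvroConverter",
            "key.converter.schema.registry.url": SCHEMA_REGISTRY_URL,
            "value.converter": "io.confluent.connect.avro.AvroConverter",
            "value.converter.schema.registry.url": SCHEMA_REGISTRY_URL,
        },
    }


def create_connector(payload: Dict[str, Any], url: str = CONNECT_URL) -> int:
    """POST the connector definition to Kafka Connect and return the HTTP status."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return response.status


print(json.dumps(build_source_connector(), indent=2))
```

Calling `create_connector(build_source_connector())` sends the request once the kafka-connect container is running. Kafka Connect normally answers a successful creation with HTTP 201.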

